Exploring Disentangled Feature Representation Beyond Face Identification

Yu Liu* Fangyin Wei* Jing Shao* Lu Sheng Junjie Yan Xiaogang Wang

In CVPR 2018

Paper | Poster | Caffe code | Caffe model

Abstract

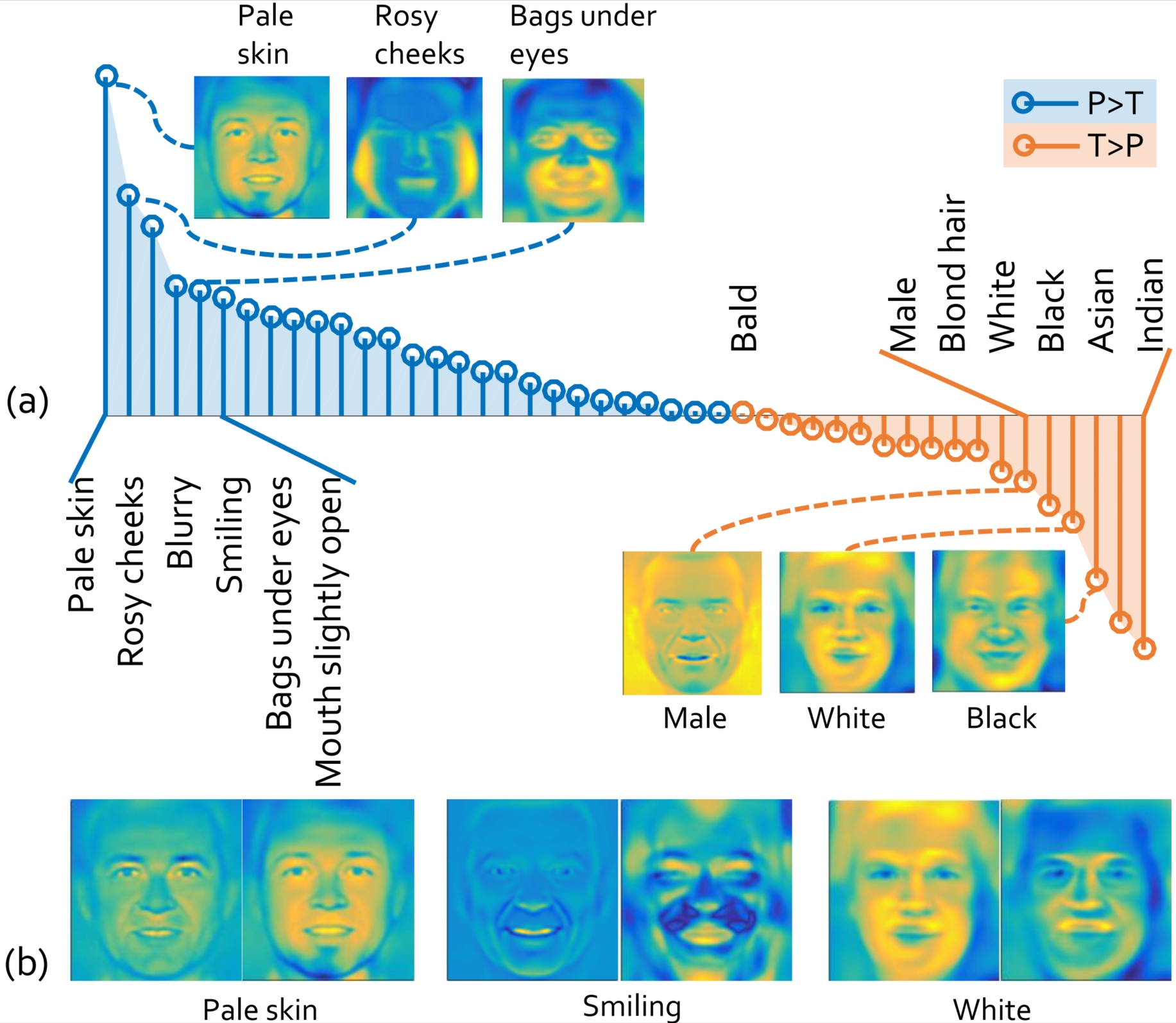

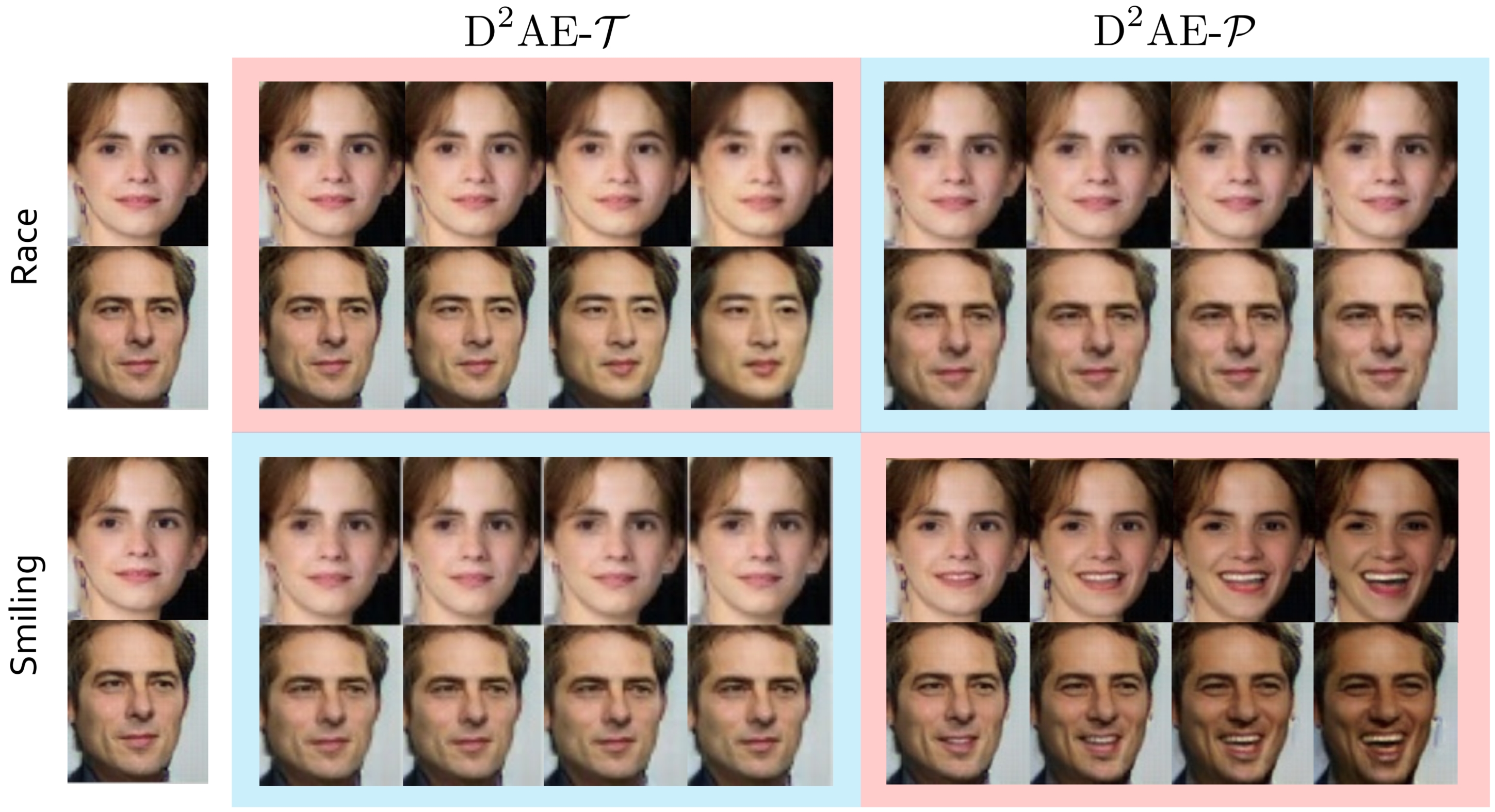

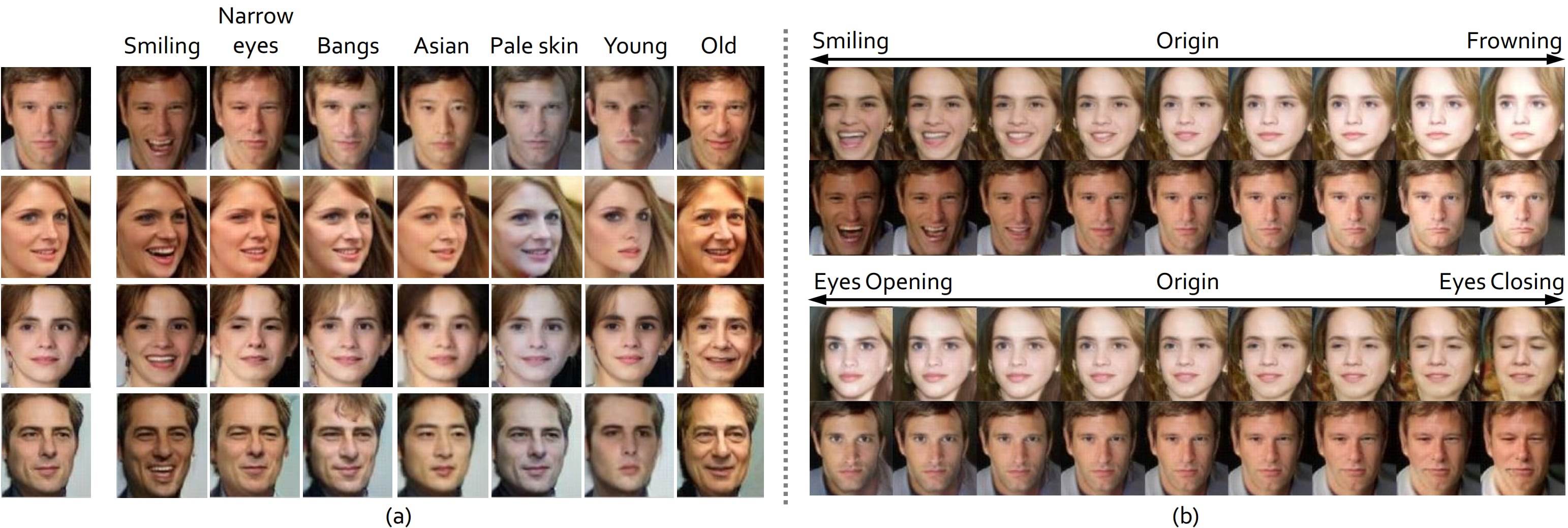

This paper proposes learning disentangled but complementary face features with minimal supervision by face identification. Specifically, we construct an identity Distilling and Dispelling Autoencoder (D2AE) framework that adversarially learns the identity-distilled features for identity verification and the identity-dispelled features to fool the verification system. Thanks to the design of two-stream cues, the learned disentangled features represent not only the identity or attribute but the complete input image. Comprehensive evaluations further demonstrate that the proposed features not only maintain state-of-the-art identity verification performance on LFW, but also acquire competitive discriminative power for face attribute recognition on CelebA and LFWA. Moreover, the proposed system is ready to semantically control the face generation/editing based on various identities and attributes in an unsupervised manner.
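The two opposing objectives described in the abstract can be illustrated with a small sketch. This is not the released Caffe implementation. It assumes that the identity-distilled stream is trained with an ordinary cross-entropy loss on the identity label, and that "fooling" the verification system is modeled by pushing the identity prediction made from the identity-dispelled stream toward a uniform distribution. The function names `distilling_loss` and `dispelling_loss` and the example logits are hypothetical and used only for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of identity logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distilling_loss(logits, identity):
    """Cross-entropy against the true identity (assumed objective for the
    identity-distilled stream): low when the identity is predicted well."""
    probs = softmax(logits)
    return -math.log(probs[identity])


def dispelling_loss(logits):
    """Cross-entropy against a uniform target over identities (assumed
    objective for the identity-dispelled stream): lowest when the
    prediction carries no identity information."""
    probs = softmax(logits)
    return -sum(math.log(p) for p in probs) / len(probs)


# Hypothetical identity logits over three identities.
confident = [4.0, 0.0, 0.0]      # strongly predicts identity 0
uninformative = [1.0, 1.0, 1.0]  # says nothing about identity

print("distilling, confident:    ", distilling_loss(confident, 0))
print("distilling, uninformative:", distilling_loss(uninformative, 0))
print("dispelling, confident:    ", dispelling_loss(confident))
print("dispelling, uninformative:", dispelling_loss(uninformative))
```

Under these assumptions the two losses pull in opposite directions: the confident prediction is rewarded by the distilling loss and penalized by the dispelling loss, while the uninformative prediction minimizes the dispelling loss at log(number of identities).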

Citation

Yu Liu*, Fangyin Wei*, Jing Shao*, Lu Sheng, Junjie Yan, and Xiaogang Wang. "Exploring Disentangled Feature Representation Beyond Face Identification", in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

(* indicates equal contributions)

Bibtex

@InProceedings{liu2018exploring,
  title={Exploring Disentangled Feature Representation Beyond Face Identification},
  author={Liu, Yu and Wei, Fangyin and Shao, Jing and Sheng, Lu and Yan, Junjie and Wang, Xiaogang},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition},
  year={2018}
}

More visualized results

Presentations and related news

June 19th, 2018: We are presenting our poster at M22 in Hall D of the Calvin L. Rampton Salt Palace Convention Center in Salt Lake City, Utah.